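Event name

【Problem-Solving Experts! We want you!】

Event description

阿摩 (Yamol) is full of hidden talent, so 阿摩 is putting up 2 million diamonds to call on experts to answer questions!

Some questions raise doubts but have attracted little discussion, so 阿摩 is taking the initiative to help everyone find the best answer.

As many as 200,000 questions carry a bounty. Check whether any fall in a subject you are good at: once your response is chosen as the best answer, you earn 10 diamonds per question.

After the bounty period ends, you also earn 10 diamonds each time another user views your explanation.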

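About diamonds

How to use them:

- ✔ Offer a bounty for question explanations

- ✔ Buy private notes

- ✔ Buy bounty explanations

- ✔ Redeem VIP (1,000 diamonds for 30 days of VIP)

- ✔ Redeem cash (50,000 diamonds for NT$4,000)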

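How to earn them:

- ✔ Answer a bounty question and be chosen as the best answer

- ✔ Write private notes and sell them

- ✔ Write explanations and sell them (must have more than 10 likes)

- ✔ Buy them directly (from the on-site store)

** A 10% handling fee applies to all diamond income.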
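The figures above can be combined into a rough estimate of how many best answers it takes to reach a redemption threshold. This is a minimal sketch, assuming the 10% fee is deducted from each 10-diamond payout; the page does not say how or when the fee is applied, and the function names are hypothetical.

```python
import math

FEE_RATE_PERCENT = 10          # from the notice: 10% fee on all diamond income
DIAMONDS_PER_BEST_ANSWER = 10  # from the event description
VIP_COST = 1000                # diamonds for 30 days of VIP
CASH_COST = 50000              # diamonds for NT$4,000


def net_diamonds(gross: int) -> float:
    """Diamonds kept after the fee (assumption: fee is taken from each payout)."""
    return gross * (100 - FEE_RATE_PERCENT) / 100


def best_answers_needed(target: int) -> int:
    """Smallest number of best answers whose net payout reaches the target."""
    net_per_answer = net_diamonds(DIAMONDS_PER_BEST_ANSWER)
    return math.ceil(target / net_per_answer)


if __name__ == "__main__":
    print(net_diamonds(DIAMONDS_PER_BEST_ANSWER))
    print(best_answers_needed(VIP_COST))
    print(best_answers_needed(CASH_COST))
```

Under that assumption each best answer nets 9 diamonds, so the thresholds are somewhat further away than the gross figures suggest.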

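Recent exam questions

【Non-multiple-choice question】

4. The French sculptor Auguste Rodin (1840-1917) created countless classic masterpieces over his lifetime, and his works combine powerful realism with spiritual depth. Works such as "The Age of Bronze", "The Burghers of Calais", "Monument to Balzac", and "The Thinker" are so lifelike that they raised suspicions of having been cast from moulds of real human bodies. How do you interpret these suspicions, and what are the reasons for them? (25 points)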


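32. A public university is conducting a procurement for which a government estimate (底價) is set. Regarding how the government estimate is set, which of the following is incorrect?

(A) It is approved by the head of the entity or a person authorized by the head.

(B) The planning, design, requesting, or using unit proposes an estimated amount with its analysis, and the unit handling the procurement then submits it for approval.

(C) It should be based on the drawings, specifications, and contract, taking into account cost, market conditions, and the award records of government entities.

(D) After tender opening, the government estimate may be changed with reference to the tenderers' prices.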


【非選題】

【題組】(三)醋酸被萃取的比例。【10分】

第四題:

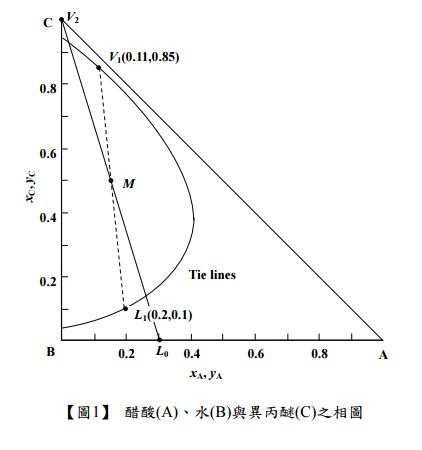

已知有ㄧ瓶含有30 wt%的醋酸水溶液(L0)共計500 kg,和另一瓶為500 kg的純異丙醚(V2),

兩者混合後成為M,水中的醋酸會被異丙醚萃取,而分布在兩液相L1和V1之中。已知醋酸

(A)、

水

(B)與異丙醚

(C)的平衡關係如【圖1】,其中的虛線為通過M的兩相平衡結線。試計算:

【Question group】(3) The fraction of the acetic acid that is extracted. 【10 points】
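The mass balance behind this question can be sketched as follows. The overall composition of M follows directly from the stated amounts; the phase compositions on the tie line must be read from the figure, which is not reproduced here, so they are left symbolic. The symbols $x_{A,1}$ and $y_{A,1}$ (acetic acid mass fractions in L1 and V1) and $x_{A,0}$ (in L0) are notation assumed for this sketch, not taken from the exam paper.

```latex
\begin{align*}
M &= L_0 + V_2 = 500 + 500 = 1000\ \text{kg} \\
x_{A,M} &= \frac{0.30 \times 500}{1000} = 0.15, \qquad
x_{B,M} = \frac{0.70 \times 500}{1000} = 0.35, \qquad
x_{C,M} = \frac{500}{1000} = 0.50 \\
L_1 + V_1 &= M, \qquad L_1\,x_{A,1} + V_1\,y_{A,1} = M\,x_{A,M} \\
V_1 &= M\,\frac{x_{A,M} - x_{A,1}}{y_{A,1} - x_{A,1}} \\
\text{fraction extracted} &= \frac{V_1\,y_{A,1}}{L_0\,x_{A,0}} = \frac{V_1\,y_{A,1}}{150\ \text{kg}}
\end{align*}
```

With $x_{A,1}$ and $y_{A,1}$ read from the two ends of the tie line through M, the last two lines give the requested fraction.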

【Non-multiple-choice question】

I. Paraphrase: Read the following passage and rephrase it in your own words (while keeping the original meaning) in 250 words.

Social media companies have been under tremendous pressure to do something about the proliferation of misinformation on their platforms. Companies like Facebook and YouTube have responded by applying anti-fake-news strategies that seem as if they would be effective. As a public-relations move, this is smart: The companies demonstrate that they are willing to take action, and the policies sound reasonable to the public.


But just because a strategy sounds reasonable doesn't mean it works. Although the platforms are making some progress in their fight against misinformation, recent research by us and other scholars suggests that many of their tactics may be ineffective - and can even make matters worse, leading to confusion, not clarity, about the truth. Social media companies need to empirically investigate whether the concerns raised in these experiments are relevant to how their users are processing information on their platforms.

One strategy that platforms have used is to provide more information about the news' source. YouTube has "information panels" that tell users when content was produced by government-funded organizations, and Facebook has a "context" option that provides background information for the sources of articles in its News Feed. This sort of tactic makes intuitive sense because well-established mainstream news sources, though far from perfect, have higher editing and reporting standards than, say, obscure websites that produce fabricated content with no author attribution. But recent research of ours raises questions about the effectiveness of this approach.

We conducted a series of experiments with nearly 7,000 Americans and found that emphasizing sources had virtually no impact on whether people believed news headlines or considered sharing them. People in these experiments were shown a series of headlines that had circulated widely on social media - some of which came from mainstream outlets such as NPR and some from disreputable fringe outlets like the now-defunct newsbreakshere.com. Some participants were provided no information about the publishers, others were shown the domain of the publisher's website, and still others were shown a large banner with the publisher's logo. Perhaps surprisingly, providing the additional information did not make people much less likely to believe misinformation.

The obvious conclusion to draw from all this evidence is that social media platforms should rigorously test their ideas for combating fake news and not just rely on common sense or intuition about what will work. We realize that a more scientific and evidence-based approach takes time. But if these companies show that they are seriously committed to that research - being transparent about any evaluations that they conduct internally and collaborating more with outside independent researchers who will publish publicly accessible reports - the public, for its part, should be prepared to be patient and not demand instant results. Proper oversight of these companies requires not just a timely response but also an effective one.

Source

New York Times

https://www.nytimes.com/2020/03/24/opinion/fake-news-social-media.html

Adapted from an article by Dr. Pennycook and Dr. Rand on March 24, 2020.

Authors: Gordon Pennycook is an assistant professor at the Hill and Levene Schools of Business at the University of Regina, in Saskatchewan. David Rand is a professor at the Sloan School of Management and in the department of brain and cognitive sciences at M.I.T.


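45. Which of the following are statutory duties of optometrists? ① The duty to prepare professional records ② The duty not to make false statements or reports to the competent authority ③ The duty not to disclose, without cause, another person's secrets learned or held through professional practice ④ The duty concerning exclusive use of the professional title

(A) ①② only

(B) ①③ only

(C) ①②③ only

(D) ①②③④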
